Scaling your Kubernetes PODS to ZERO and BACK with K8s & KEDA

In this post, let’s see how we can dynamically scale data-processing or data-oriented applications down to zero resources, and back up to the desired capacity only when there is demand, using modern infrastructure tools like K8s (Kubernetes) and KEDA (Kubernetes Event-driven Autoscaling).

We will also cover a major pitfall of KEDA when scaling in (or to zero) and how to handle it.

Note: Installing K8s, KEDA and Kafka is out of scope for this post, as there are numerous posts and videos covering them.

Why scale to ZERO?

- Don’t we all want to spend less?

- Why keep the infrastructure up and running when it’s idle?

A Simple Application

Let’s consider a simple demo app for this post. Don’t worry: you can easily apply this method to an application of any scale or complexity.

Let’s see how we can scale the data-processing application to ZERO when there is no data to process, and back to the desired number of servers/replicas when messages start to flow in and pile up.

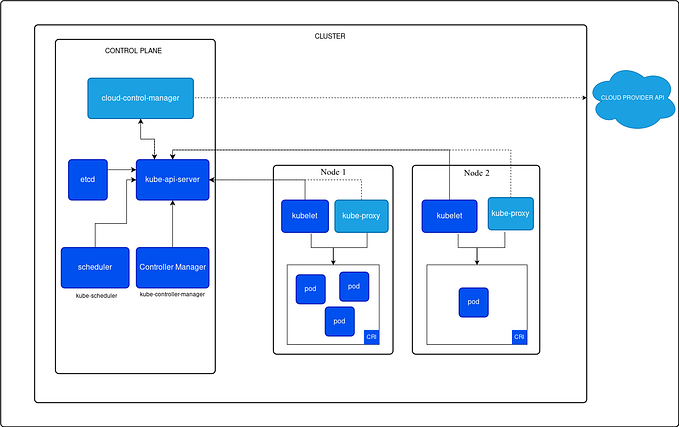

Back to School

Before we get into the solution and implementation, let’s take a step back to understand how K8s and KEDA work together to scale the pods (application containers) in and out.

What is the role of KEDA?

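- KEDA hands the actual pod scaling over to the native K8s HPA, which scales on the custom (external) metrics KEDA provides. KEDA itself only handles activation, i.e. scaling from 0 to 1 replica and back to 0; everything between 1 and maxReplicaCount is left to the HPA.

- KEDA publishes those metrics by watching the Kafka topic and its consumer-group LAG.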

How does the K8s HPA work?

The Kubernetes HPA (Horizontal Pod Autoscaler) uses a fairly involved algorithm that can combine several metrics, and it deserves a separate blog post of its own.

To put it simply:

desiredReplicas = ceil[currentReplicas * ( currentMetricValue / desiredMetricValue )]

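where, for the Kafka trigger, currentMetricValue is the average lag per replica

currentMetricValue = currentKafkaTopicTotalLag / currentReplicas

and desiredMetricValue is the lagThreshold configured on the trigger.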

You can read more about the K8s HPA in the Kubernetes documentation. With this simple algorithm in mind, let’s jump into the solution and implementation.
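To make the formula concrete, here is a minimal Python sketch, assuming the Kafka trigger’s lagThreshold plays the role of desiredMetricValue and using the minReplicaCount/maxReplicaCount values from this post’s manifest (1 and 5). The helper `desired_replicas` is hypothetical, and it deliberately ignores the HPA’s tolerance band, stabilization windows and scaling policies.

```python
import math
from fractions import Fraction


def desired_replicas(current_replicas: int, total_lag: int, lag_threshold: int,
                     min_replicas: int = 1, max_replicas: int = 5) -> int:
    """Simplified HPA calculation for a Kafka lag metric (hypothetical helper).

    Ignores the HPA tolerance band, stabilization windows and scaling policies.
    """
    if current_replicas < 1:
        # At zero replicas the HPA does nothing; KEDA decides whether to activate.
        raise ValueError("the HPA only acts when at least one replica is running")
    # currentMetricValue = total lag / current replicas (average lag per pod)
    current_metric = Fraction(total_lag, current_replicas)
    # desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)
    raw = math.ceil(current_replicas * current_metric / lag_threshold)
    # Clamp to [minReplicaCount, maxReplicaCount]
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(1, 50, 20))   # → 3
print(desired_replicas(2, 200, 20))  # → 5 (10 before the maxReplicaCount cap)
```

Because currentReplicas cancels out, this reduces to ceil(totalLag / lagThreshold): the replica count depends only on the total lag. Keep that in mind for the real-world challenge below, where the lag can fall while work is still in progress.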

Enabling KEDA

```yaml
---
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: kafka-autoscale-demo
  namespace: keda-kafka-demo
spec:
  scaleTargetRef:
    name: keda-kafka-demo-consumer   # the Deployment to scale
  pollingInterval: 5                 # seconds between lag checks
  cooldownPeriod: 120                # seconds after the trigger was last active before scaling to idle
  idleReplicaCount: 0                # scale all the way down to zero when idle
  minReplicaCount: 1
  maxReplicaCount: 5
  triggers:
    - type: kafka
      metadata:
        bootstrapServers: kafka.ashokraja.in:9092
        consumerGroup: k8-keda-kafka-demo-consumer
        topic: k8s_keda_autoscale_testing
        offsetResetPolicy: latest
        lagThreshold: '20'           # target average lag per replica
        activationLagThreshold: '0'  # any lag above 0 wakes the consumer up from zero
```

Wow! Magic happens, and everything works as expected in a perfect world!!!

In this perfect world, the message flow is 1:1 or close to it, meaning the consumers consume messages at the same rate the producers produce them.
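The scale-to-zero part of the manifest is driven by KEDA rather than by the HPA. Below is a simplified model of that decision (an illustration, not KEDA’s actual code), assuming the trigger counts as active whenever the lag exceeds activationLagThreshold; the helper `keda_decision` is hypothetical. Roughly, KEDA makes a decision like this every pollingInterval seconds.

```python
from typing import Optional, Tuple

# Values taken from the ScaledObject manifest
COOLDOWN_PERIOD = 120          # cooldownPeriod (seconds)
IDLE_REPLICA_COUNT = 0         # idleReplicaCount
MIN_REPLICA_COUNT = 1          # minReplicaCount
ACTIVATION_LAG_THRESHOLD = 0   # activationLagThreshold


def keda_decision(current_replicas: int, total_lag: int,
                  last_active: Optional[float], now: float
                  ) -> Tuple[Optional[int], Optional[float]]:
    """Return (replicas KEDA sets itself, or None, and the updated last-active time).

    None means KEDA leaves sizing to the HPA (the 1..N range).
    Hypothetical, simplified model for illustration only.
    """
    if total_lag > ACTIVATION_LAG_THRESHOLD:
        # Trigger is active: record the time and wake the deployment if it is idle.
        if current_replicas < MIN_REPLICA_COUNT:
            return max(MIN_REPLICA_COUNT, 1), now
        return None, now
    cooled_down = last_active is None or now - last_active > COOLDOWN_PERIOD
    if current_replicas > IDLE_REPLICA_COUNT and cooled_down:
        # Inactive for longer than cooldownPeriod: scale straight to idle (0).
        return IDLE_REPLICA_COUNT, last_active
    return None, last_active


print(keda_decision(0, 5, None, now=0))   # → (1, 0)
print(keda_decision(3, 0, 0, now=60))     # → (None, 0): still cooling down
print(keda_decision(3, 0, 0, now=125))    # → (0, 0)
```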

Real World Challenge (All Hell Breaks Loose!!)

KEDA’s defaults work great when consumers process messages without any delay and commit their offsets back promptly, so Kafka rebalancing is never blocked and there are no idle consumers.

But that is not true for ETL jobs, workflows or pipelines that have to do a lot of scraping, processing, computation or transformation on the consumed messages. Messages may be produced at a predictable rate.

But the consumers are not keeping up, or they commit their offsets back early (to avoid blocking Kafka rebalancing and leaving consumers idle) while the real work is still in progress.

In such a scenario, K8s/KEDA/HPA sees the lag drop and concludes that the current number of pods is far too high, since it has no knowledge of the consumers that are still processing those messages, and starts to scale the pods down. And because it doesn’t know which pods are still busy, it effectively picks pods at random and kills them.
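A toy simulation shows why this happens. It assumes a hypothetical workload in which each of five pods grabs a batch of 20 messages, commits the offsets immediately and then spends 10 ticks processing. It uses the simplified ceil(totalLag / lagThreshold) rule and ignores the HPA’s default 300-second scale-down stabilization window, which only postpones the outcome when processing takes longer than that window.

```python
import math
from typing import List, Tuple

LAG_THRESHOLD = 20            # lagThreshold
MIN_REPLICAS, MAX_REPLICAS = 1, 5


def desired_replicas(total_lag: int) -> int:
    # Simplified HPA rule: ceil(totalLag / lagThreshold), clamped to [min, max]
    return max(MIN_REPLICAS, min(MAX_REPLICAS, math.ceil(total_lag / LAG_THRESHOLD)))


def simulate(total_messages: int = 100, batch: int = 20, processing_ticks: int = 10,
             replicas: int = 5) -> List[Tuple[int, int, int, int]]:
    """Return (tick, lag, busy_pods, desired_replicas) for each tick (hypothetical workload)."""
    lag = total_messages
    busy_until = [0] * replicas  # tick at which each pod finishes its current batch
    timeline = []
    for tick in range(processing_ticks + 1):
        for pod in range(replicas):
            if busy_until[pod] <= tick and lag > 0:
                lag -= min(batch, lag)  # offsets committed on receipt, so the lag falls at once
                busy_until[pod] = tick + processing_ticks
        busy = sum(1 for t in busy_until if t > tick)
        timeline.append((tick, lag, busy, desired_replicas(lag)))
    return timeline


for tick, lag, busy, desired in simulate()[:3]:
    print(f"tick={tick} lag={lag} busy_pods={busy} desired_replicas={desired}")
```

Each printed line shows five busy pods against a desired count of one: the HPA would remove four pods that are in the middle of a batch. And with the lag at 0, KEDA will also scale to zero once cooldownPeriod has passed.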

Every problem has a Solution

Solution 1: Delay K8s HPA Scale Down as long as you desire

```yaml
advanced:
  horizontalPodAutoscalerConfig:
    behavior:
      scaleDown:
        selectPolicy: Disabled
```

Complete manifest sample:

```yaml
---
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: kafka-autoscale-demo
  namespace: keda-kafka-demo
spec:
  scaleTargetRef:
    name: keda-kafka-demo-consumer
  pollingInterval: 5
  cooldownPeriod: 120
  idleReplicaCount: 0
  minReplicaCount: 1
  maxReplicaCount: 5
  advanced:
    horizontalPodAutoscalerConfig:
      behavior:
        scaleDown:
          selectPolicy: Disabled   # the HPA never scales the pods down
  triggers:
    - type: kafka
      metadata:
        bootstrapServers: kafka.ashokraja.in:9092
        consumerGroup: k8-keda-kafka-demo-consumer
        topic: k8s_keda_autoscale_testing
        offsetResetPolicy: latest
        lagThreshold: '20'
        activationLagThreshold: '0'
```

With this tweak in place, the HPA never scales the pods down: they stay at the highest replica count reached until the lag drops to 0 and stays there for cooldownPeriod, after which KEDA scales the deployment straight to zero. So pay attention to cooldownPeriod = 120 sec and tune it to how long a consumer takes to process its messages, since it decides how long the pods survive once the lag reaches 0.
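To see what `selectPolicy: Disabled` changes, here is a simplified Python model (an illustration, not KEDA or HPA source code) that replays a hypothetical lag series sampled every pollingInterval (5 seconds) using the manifest’s values. It assumes that, without the tweak, the HPA scales down immediately (the real default 300-second stabilization window only postpones this) and that KEDA scales straight to idleReplicaCount once the lag has been 0 for longer than cooldownPeriod. The helpers `hpa_recommendation` and `replica_trajectory` are hypothetical.

```python
import math
from typing import List, Optional

LAG_THRESHOLD = 20          # lagThreshold
MIN_R, MAX_R = 1, 5         # minReplicaCount / maxReplicaCount
IDLE = 0                    # idleReplicaCount
COOLDOWN = 120              # cooldownPeriod (seconds)
POLL = 5                    # pollingInterval (seconds)


def hpa_recommendation(lag: int) -> int:
    # Simplified HPA rule: ceil(totalLag / lagThreshold), clamped to [min, max]
    return max(MIN_R, min(MAX_R, math.ceil(lag / LAG_THRESHOLD)))


def replica_trajectory(lags: List[int], scale_down_disabled: bool) -> List[int]:
    """Replica count after each poll for a hypothetical lag series."""
    replicas: int = IDLE
    last_active: Optional[int] = None
    out: List[int] = []
    for i, lag in enumerate(lags):
        now = i * POLL
        if lag > 0:  # activationLagThreshold: '0'
            last_active = now
            if replicas == IDLE:
                replicas = MIN_R  # KEDA activates the deployment (0 -> 1)
            rec = hpa_recommendation(lag)
            # With selectPolicy: Disabled the HPA may scale up but never down
            replicas = max(replicas, rec) if scale_down_disabled else rec
        elif replicas > IDLE and last_active is not None and now - last_active > COOLDOWN:
            replicas = IDLE  # KEDA scales straight to idleReplicaCount
        elif replicas > IDLE and not scale_down_disabled:
            replicas = MIN_R  # the HPA drops to minReplicaCount
        out.append(replicas)
    return out


lags = [100, 60, 30, 10] + [0] * 31
print(replica_trajectory(lags, scale_down_disabled=False)[:6])  # → [5, 3, 2, 1, 1, 1]
print(replica_trajectory(lags, scale_down_disabled=True)[:6])   # → [5, 5, 5, 5, 5, 5]
```

In both runs the deployment reaches zero at the same poll, roughly cooldownPeriod after the last non-zero lag reading; the difference is that with scale-down disabled the pods that grabbed the early batches are never killed in between.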

Solution 2: Use ScaledJobs instead of ScaledObjects

For very long-running jobs: say, for some weird reason, your consumer runs for hours or even days ;).